Tackling Quality Assurance With a Small Software Team

Article by Ayana Campbell Smith — May 31, 2022

Quality assurance (which we’ll just call QA from here on out) is a vital part of every application build. It puts the full suite of functionality to the test, checks behaviors, squashes bugs, and gives everyone a chance to sign off on the final result. If you’re a small team with a limited QA budget and no dedicated QA practitioners, though, how do you approach this key deliverable with the care it deserves?

Like many small development shops, we’ve navigated projects that’ve had to make the most out of every hour. In that experience, we’ve found that QA is best approached as a collaborative and continuous effort.

Communication Breakdowns Become QA Issues

Projects typically follow a common process: research and discovery lead into design and development, then a QA team takes over ahead of launch. Using dedicated specialists for that last step (independent of implementation) works well for a number of reasons:

- As specialists, they’re able to provide well-formed, thorough feedback.

- They offer a fresh set of eyes and an unbiased outside opinion.

- Since they’re separate from implementation, they aren’t swayed by the sunk cost fallacy the way designers and developers can be.

It’s not all sunshine and rainbows, though. Software is a combination of many overlapping disciplines, and the final product is a chain of handoffs. Whether it’s from strategy to implementation, or from design to development, each of these handoffs introduces new potential gaps between expectation and reality.

Think of it as a game of telephone. You’re communicating about the intended functionality of an important feature, and each exchange risks warping the message a little further from the original need. These gaps then tend to surface all at once at the end, during QA.

By adding another team, you’ve introduced another handoff (and another potential gap). And by leaving quality checks until the bitter end, you let major issues waltz on through to the eleventh hour.

QA Is Best Approached Continuously, Not at the End

Fortunately, there is a different approach to QA that yields excellent results in lieu of a dedicated team. One that aligns with our belief that QA isn’t just a final step, but a continuous process that touches every project phase from discovery to launch.

By taking this approach, all team members (that means stakeholders, too) play a role, shifting responsibility from a dedicated team to the team as a whole. Best of all, adopting integrated QA keeps costs low, team engagement high, and budgets and timelines easier to keep track of.

So how does this play out in real life? For us, it’s a two-part system that’s woven into each project phase:

- During discovery: A documented and agreed-upon set of product features forms the basis for all work to come and details the criteria for final acceptance.

- During implementation: Processes are added to elevate QA from an afterthought to an integrated, shared responsibility.

How Envy Maximizes Quality Without a Dedicated QA Team

An integrated QA process requires a streamlined approach, detailed by the following steps.

Clear Documentation of Expected Behaviors

If your goal is to maximize QA’s value and make everyone a capable contributor (spoiler: it should be), then it’s best to start with a well-documented set of features. A list of requirements may sound like a no-brainer, but the key is to complete it after discovery and research wrap up and before implementation begins.

It’s a vital reference point for everyone involved, helping project team members know exactly what features need to be implemented and how they should function. At the same time, this effort often raises previously unasked questions and highlights important-but-missing functionality long before it becomes a deadline-breaking surprise. As an added benefit, having a central bit of documentation approved by all parties protects against the dangers of scope creep, a common threat to custom software projects.

Creating a Requirements Document

There’s no shortage of ways to prepare a requirements document these days:

- A wiki, like the ones found in GitHub or Confluence

- A shared document (or series of docs) — Google Docs, Dropbox Paper, etc.

- Issues and project boards in places like GitHub, Asana, or Trello

- Any other place that’s comfortable and allows for shared, iterative content

The big ask: Ensure this document remains relevant over the course of the project. To pull that off, catalog features in a way that each team member will be able to understand, act upon, and provide feedback on during implementation. When done well, this document will not only provide guidance for the implementation phase, but also reflect acceptance criteria for later reviews.
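One way to keep such a document actionable is to treat each feature as a small structured record with its acceptance criteria attached. The sketch below is only an illustration: the `Feature` class, its fields, and the sample features are hypothetical, not a format prescribed here. It shows how a team could flag features that nobody can sign off on yet because no criteria have been written down.

```python
from dataclasses import dataclass, field


@dataclass
class Feature:
    """One entry in a hypothetical requirements document."""
    name: str
    description: str
    acceptance_criteria: list[str] = field(default_factory=list)


def features_missing_criteria(features: list[Feature]) -> list[str]:
    """Return the names of features that have no acceptance criteria yet."""
    return [f.name for f in features if not f.acceptance_criteria]


# Made-up sample entries for illustration only.
requirements = [
    Feature(
        name="Password reset",
        description="Users can reset a forgotten password by email.",
        acceptance_criteria=[
            "Submitting a known email sends a reset link",
            "The reset link stops working after one use",
        ],
    ),
    Feature(
        name="CSV export",
        description="Admins can export the user list.",
    ),
]

print(features_missing_criteria(requirements))
```

The same idea works in a wiki table or an issue template; the point is that every feature carries the criteria it will later be reviewed against.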

Integrating QA During Implementation

The implementation phase offers the greatest opportunity for integrated QA. As the product begins to take shape through design and development, automated testing and staging environments are core and should be non-negotiable.

Automation, plus a shared place to review work, turns all of this into a team activity. For example, Google’s Lighthouse is a great way to continuously check the overall quality of your product based on audit metrics like performance, accessibility, and SEO. Tools like Prettier (for formatting) and TypeScript (for static type checking, where it fits your stack) set code quality standards that would otherwise be left up to personal responsibility. And running test suites on continuous integration services like CircleCI and Travis CI sets the bar that newly committed features need to clear.

Persistent testing is valuable in that it reveals both the things that work well and those that need improvement long before launch. More important, though, is the way it offloads time-consuming tasks, freeing team members up to focus on other priorities.
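As a rough sketch of what "a bar to clear" can look like, the snippet below checks category scores from a Lighthouse JSON report against minimums. It assumes the report was saved with Lighthouse's JSON output, where each entry under `categories` carries a `score` from 0 to 1. The threshold values, the `failing_categories` helper, and the sample report are hypothetical; a real team would agree on its own numbers.

```python
def failing_categories(report: dict, minimums: dict[str, float]) -> dict[str, float]:
    """Return each category whose score falls below its minimum.

    A missing or null score is treated as 0.0 so that it fails loudly
    instead of slipping through.
    """
    failures = {}
    for category_id, minimum in minimums.items():
        category = report.get("categories", {}).get(category_id, {})
        score = category.get("score")
        if score is None:
            score = 0.0
        if score < minimum:
            failures[category_id] = score
    return failures


# Hypothetical thresholds, chosen only for this example.
MINIMUMS = {"performance": 0.80, "accessibility": 0.90, "seo": 0.90}

# A trimmed-down stand-in for a real Lighthouse JSON report.
sample_report = {
    "categories": {
        "performance": {"score": 0.72},
        "accessibility": {"score": 0.95},
        "seo": {"score": 0.91},
    }
}

failures = failing_categories(sample_report, MINIMUMS)
for category_id, score in failures.items():
    print(f"{category_id}: {score:.2f} is below {MINIMUMS[category_id]:.2f}")
```

In a CI job, a non-empty `failures` result would typically cause the step to exit with a non-zero status so the build is marked as failed.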

An Aside on Smaller Releases

Throughout implementation, getting specific features onto a staging environment as they’re completed unlocks a good amount of QA potential. Breaking releases down into digestible chunks allows for easier, focused, and regular testing of the application, as opposed to huge updates that may touch every part of a complex system. Keeping new code focused and frequent is what starts the cycle.

How Stakeholders Can Pitch In

A careful review of everything is essential. It’s important to walk through flows in their entirety and ensure there are no gaps or dead ends, all while checking for visual correctness and quality. Throughout, keep a running list of anything and everything that needs to be addressed (even the small things).

Here are some of the most important QA issues to look out for:

Reviewing Key Functionality and Core Flows

Does the product function as expected? Answer this baseline question by thoroughly testing the acceptance criteria laid out during discovery. Take time to walk through major flows and reveal any areas where incorrect or incomplete functionality is derailing the experience. As an added bonus, this process helps uncover opportunities for functionality or ease-of-use improvements, as budget and timelines allow.

Oversights and Edge Cases

Assuming functionality has met the acceptance criteria, are there interface or experience shortcomings that should be addressed? At scale, with real content, it’s time for your domain expertise to shine through and point out anything additional that would benefit the application’s demographic. Are there places where more feedback to the user would be helpful? Are there new edge cases to handle, especially early in the product life cycle when data is limited?

Design deserves another look here, too. Those pixel-perfect approved designs may not look so perfect once placeholder content gives way to real data. Does the platform account for a short name like John Smith as well as a longer one like Chimamanda Ngozi Adichie?
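A lightweight way to surface this kind of issue early is to run realistic sample content through the same rules the interface applies. The sketch below is illustrative only: the `fit_label` helper and the 16-character budget are assumptions, standing in for whatever truncation or layout rule a real design uses.

```python
def fit_label(text: str, max_chars: int) -> str:
    """Shorten text to at most max_chars, marking any cut with '...'."""
    if max_chars < 4:
        raise ValueError("max_chars must leave room for text plus '...'")
    if len(text) <= max_chars:
        return text
    # Reserve three characters for the ellipsis and drop trailing spaces.
    return text[: max_chars - 3].rstrip() + "..."


# Sample names that vary in length, used as review fixtures.
SAMPLE_NAMES = ["John Smith", "Chimamanda Ngozi Adichie"]

# Hypothetical character budget for a name label in the design.
LABEL_BUDGET = 16

for name in SAMPLE_NAMES:
    print(f"{name!r} -> {fit_label(name, LABEL_BUDGET)!r}")
```

Seeing which names get cut, and how the result reads, gives reviewers something concrete to react to before real users supply the data.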

Setting a Quality Bar

Functionality that meets a need should be the core focus of any software project. In the bigger picture, though, it’s only a piece of the end-user satisfaction puzzle. QA should never just stop at “it works.” Instead, place just as much emphasis on ensuring quality standards are met. For us, that includes:

- Producing high-quality visual assets

- Adding polish and delight with thoughtful animations

- Making sure user flows are obvious, intuitive, and complete

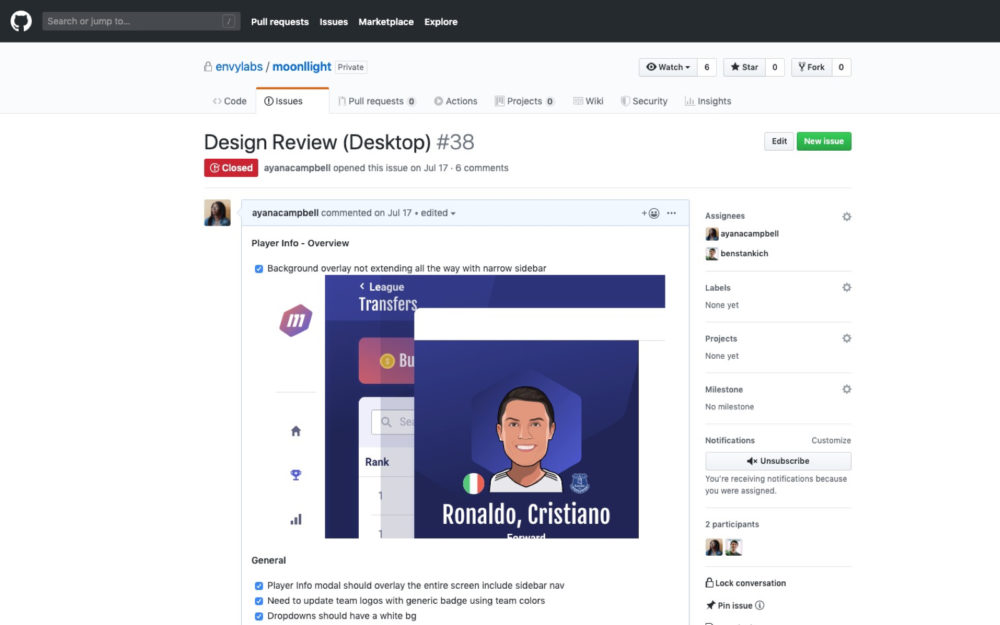

Throughout the review process, QA issues should be triaged (usually with the development team’s help) into actionable tasks. We’re fans of GitHub Issues in this context. Like most traditional ticketing systems, it provides a means for creating threaded tickets, while also supporting:

- Labels for categorizing and prioritizing

- Project boards for further categorization and status

- Milestones for phased releases and a deeper understanding of status

- Assignments to keep team members informed of their responsibilities and needs

- Checklist formatting to help manage subtasks and punch lists
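To make the triage step concrete, here is a minimal sketch of ordering review findings by label and rendering them as a task-list style punch list (the `- [ ]` checkbox syntax). The label names, the `QaIssue` class, and the sample issues are hypothetical, and nothing here calls the GitHub API; it only models the sorting.

```python
from dataclasses import dataclass, field

# Hypothetical label names, ordered from most to least urgent.
PRIORITY_ORDER = ["blocker", "bug", "polish"]


@dataclass
class QaIssue:
    title: str
    labels: list[str] = field(default_factory=list)


def priority_rank(issue: QaIssue) -> int:
    """Rank an issue by its most urgent label; unlabeled issues sort last."""
    ranks = [PRIORITY_ORDER.index(label) for label in issue.labels
             if label in PRIORITY_ORDER]
    return min(ranks, default=len(PRIORITY_ORDER))


def punch_list(issues: list[QaIssue]) -> str:
    """Render issues as a checklist, most urgent first."""
    ordered = sorted(issues, key=priority_rank)
    return "\n".join(f"- [ ] {issue.title}" for issue in ordered)


# Made-up findings from a review session.
issues = [
    QaIssue("Button hover animation stutters", ["polish"]),
    QaIssue("Checkout fails on empty cart", ["blocker", "bug"]),
    QaIssue("Footer link goes nowhere"),
    QaIssue("Avatar overlaps long names", ["bug"]),
]

print(punch_list(issues))
```

Unlabeled items land at the bottom of the list, which is a useful prompt to triage them rather than a judgment that they matter least.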

As feedback is ironed out, make it a point to keep the lines of communication as open as possible. This often involves pairing a stakeholder with an implementation specialist to answer questions, bounce ideas around, and make last-minute decisions as needed.

Through integrated QA, automation, and ongoing collaborative review, you create a continuous QA environment in which all team members take a piece of the responsibility pie. Best of all, with this approach, staging can be transitioned to production without much fanfare. Our favorite launches are the boring ones.

Taking an integrated approach to QA is an excellent alternative to working with a dedicated QA team. Despite the tradeoffs that come with not employing QA specialists, using the methods discussed above enables you to pick up many similar benefits, even with a small team and limited resources.
