Event Sourcing 101: The Advantages of Immutable Events

Article by Nathaniel Bibler — Apr 28, 2020

What is event sourcing? Event sourcing is a fundamental change to the way a software application is architected. Most applications today rely on database tables to keep track of, share, and persist their current state. This approach certainly works and has worked for many years, but it’s not without its drawbacks. An event sourced application accomplishes the same goals, but it does so by recording every activity (or “event”) that has occurred within the application. This approach can avoid many of the problems of traditional systems and unlock new possibilities.

Traditional vs Event Sourced Applications

Many applications today have a database with a users table. That table holds the current information for every known user. When a user changes their name, for example, their record in the users table is updated to the new value. In an event sourced system, however, no users table exists. Instead, the application records a history of every event that has occurred (a user was created, their name was changed, their email address was changed) in an ordered list, or “event log.”

Events in an event sourced system are sometimes referred to as “facts,” because once they’re written, they are indisputable. They have happened and they cannot be changed. In an event sourced system, events are immutable.
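As a minimal sketch of these two ideas (an ordered event log and immutable events), here is one way to model them in Python. The `Event` and `EventLog` names and fields are hypothetical, chosen only for illustration. `frozen=True` and a read-only `MappingProxyType` payload make an event reject modification once it is written, and the log only supports appending.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone
from types import MappingProxyType
from typing import Any, Mapping


@dataclass(frozen=True)
class Event:
    """One recorded fact. frozen=True makes attribute assignment raise an error."""
    stream_id: str            # e.g. the user this event is about
    event_type: str           # e.g. "UserCreated", "NameChanged"
    data: Mapping[str, Any]   # read-only event payload
    occurred_at: datetime


class EventLog:
    """A hypothetical append-only, ordered list of events."""

    def __init__(self) -> None:
        self._events: list[Event] = []

    def append(self, stream_id: str, event_type: str, **data: Any) -> Event:
        # Copy the payload and wrap it in a read-only view so it cannot be edited later.
        event = Event(stream_id, event_type, MappingProxyType(dict(data)),
                      datetime.now(timezone.utc))
        self._events.append(event)
        return event

    def all(self) -> tuple[Event, ...]:
        # Return a tuple so callers cannot reorder or delete history.
        return tuple(self._events)


log = EventLog()
log.append("user-1", "UserCreated", name="Ada", email="ada@example.com")
log.append("user-1", "NameChanged", name="Ada Lovelace")
```

Real event stores also enforce immutability at the storage layer; this sketch only guards against accidental changes in memory.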

The rest of the application is then written to react to those events. Because each event is a small snapshot of one focused thing that happened, the event listeners that react to them tend to be small, simple pieces of code. Most often, each listener watches for a single event type and performs a specific function. As a bonus, small and focused listeners tend to be easier to write, test, and debug. For example, a listener could watch for user sign-in events and, for each one, inform your analytics or marketing engine who signed in, when they did it, and perhaps how many times they have signed in so far.
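The sign-in listener described above might look something like the following sketch. `EventBus`, the `UserSignedIn` event type, and the `send` callback are hypothetical stand-ins; in a real application, `send` would be your analytics or marketing client. Each listener subscribes to a single event type and does one job.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from typing import Callable


@dataclass(frozen=True)
class Event:
    event_type: str
    user_id: str
    occurred_at: datetime


Listener = Callable[[Event], None]


class EventBus:
    """Routes each recorded event to the listeners registered for its type."""

    def __init__(self) -> None:
        self._listeners: dict[str, list[Listener]] = defaultdict(list)

    def subscribe(self, event_type: str, listener: Listener) -> None:
        self._listeners[event_type].append(listener)

    def publish(self, event: Event) -> None:
        for listener in self._listeners[event.event_type]:
            listener(event)


class SignInAnalyticsListener:
    """Hypothetical listener: reports each sign-in to an analytics sink."""

    def __init__(self, send: Callable[[dict], None]) -> None:
        self._send = send  # stand-in for an analytics/marketing client
        self._counts: dict[str, int] = defaultdict(int)

    def __call__(self, event: Event) -> None:
        self._counts[event.user_id] += 1
        self._send({
            "user_id": event.user_id,
            "signed_in_at": event.occurred_at.isoformat(),
            "sign_in_count": self._counts[event.user_id],
        })
```

To stay short, the listener counts sign-ins in memory; a production version would more likely read that count from a stored projection.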

Avoiding Data Loss with Event Sourcing

In the traditional application, each of those user updates overwrote the previous data, which was then lost. In stark contrast, an event sourced system never overwrites data, so nothing is ever forgotten. There’s no need for the separate audit log or history table often found in a traditional application. By replaying the events in the log in order, applying each event’s change on top of the changes before it, an event sourced application can build or rebuild all of its information as of any point in time.
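Replaying is a fold: start from an empty state and apply each event’s change, in log order, to the result of the previous step. This sketch assumes hypothetical `UserCreated`, `NameChanged`, and `EmailChanged` events carrying plain dictionaries.

```python
from __future__ import annotations

from dataclasses import dataclass
from functools import reduce
from typing import Any


@dataclass(frozen=True)
class Event:
    user_id: str
    event_type: str
    data: dict[str, Any]


def apply(state: dict[str, dict[str, Any]], event: Event) -> dict[str, dict[str, Any]]:
    """Return a new state with one event's change applied; the old state is untouched."""
    users = dict(state)
    if event.event_type == "UserCreated":
        users[event.user_id] = dict(event.data)
    elif event.event_type in ("NameChanged", "EmailChanged"):
        users[event.user_id] = {**users[event.user_id], **event.data}
    # Event types this fold doesn't care about are skipped.
    return users


def replay(events: list[Event]) -> dict[str, dict[str, Any]]:
    """Rebuild state by folding every event, in log order, into an empty state."""
    return reduce(apply, events, {})
```

Replaying a prefix of the log (for example `replay(events[:n])`) rebuilds the state as it stood after the first `n` events.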

An event sourced application can be thought of as a giant, replayable audit log. You can replay every event to determine the current data (“state”), or stop the replay early to see the state as of an hour ago, or even a month back. Among many other benefits, this greatly improves the developer experience: teams can debug a production issue by seeing exactly what happened, when it happened, and which specific combination of events caused the problem. This is immensely valuable.
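Seeing the state “as of an hour ago” is the same replay, stopped at a cutoff time. This sketch assumes a time-ordered log of hypothetical name events and replays only those recorded at or before `as_of`.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Event:
    user_id: str
    event_type: str
    name: str
    occurred_at: datetime


def names_as_of(events: list[Event], as_of: datetime) -> dict[str, str]:
    """Replay only the events recorded at or before `as_of` (the log is time-ordered)."""
    names: dict[str, str] = {}
    for event in events:
        if event.occurred_at > as_of:
            break  # every later event happened after the cutoff
        if event.event_type in ("UserCreated", "NameChanged"):
            names[event.user_id] = event.name
    return names


start = datetime(2020, 3, 1, 9, 0)
log = [
    Event("user-1", "UserCreated", "Ada", start),
    Event("user-1", "NameChanged", "Ada Lovelace", start + timedelta(days=30)),
]
print(names_as_of(log, start + timedelta(days=1)))   # {'user-1': 'Ada'}
print(names_as_of(log, start + timedelta(days=31)))  # {'user-1': 'Ada Lovelace'}
```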

Trade-offs of Event Sourcing

Maintaining that single, long list of events is useful. But reading or querying it directly can become inefficient as more activity is recorded in the system. So you may elect to periodically record (or “project,” in event sourcing terms) the current state of the system. For example, you could play back user-related events to project a table of all current users and their most recent information. This gets you back to exactly what you had in the traditional system, with the added benefit of knowing every action that has ever occurred to get you to that point.
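A projection can be as simple as replaying the user events into an ordinary table. This sketch uses Python’s built-in `sqlite3` module with an in-memory database purely so it runs anywhere; the `users` schema and event shapes are hypothetical. Dropping and rebuilding the table on each run keeps the example short; a production projector would more likely apply only new events incrementally.

```python
import sqlite3

# Hypothetical event log entries: (user_id, event_type, data), in log order.
EVENTS = [
    ("user-1", "UserCreated", {"name": "Ada", "email": "ada@example.com"}),
    ("user-2", "UserCreated", {"name": "Grace", "email": "grace@example.com"}),
    ("user-1", "NameChanged", {"name": "Ada Lovelace"}),
]


def project_users(conn: sqlite3.Connection, events) -> None:
    """Rebuild the users table from scratch by replaying every user event."""
    conn.execute("DROP TABLE IF EXISTS users")
    conn.execute("CREATE TABLE users (id TEXT PRIMARY KEY, name TEXT, email TEXT)")
    for user_id, event_type, data in events:
        if event_type == "UserCreated":
            conn.execute(
                "INSERT INTO users (id, name, email) VALUES (?, ?, ?)",
                (user_id, data["name"], data["email"]),
            )
        elif event_type == "NameChanged":
            conn.execute("UPDATE users SET name = ? WHERE id = ?", (data["name"], user_id))
        elif event_type == "EmailChanged":
            conn.execute("UPDATE users SET email = ? WHERE id = ?", (data["email"], user_id))
    conn.commit()


conn = sqlite3.connect(":memory:")
project_users(conn, EVENTS)
print(conn.execute("SELECT id, name, email FROM users ORDER BY id").fetchall())
```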

Projecting this state into tables has other benefits as well. In a read-heavy application, it can greatly improve overall performance, because no calculation needs to happen on the read side. The projections can, and should, pre-calculate the necessary values and write the results directly into the projected tables. The read side of the application then simply pulls the data and presents it, just as if it were reading from a cache.
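To illustrate pre-calculating on the write side, this sketch keeps a hypothetical per-user activity row up to date as each `SignedIn` event arrives, so reading it is just a dictionary lookup. The names are illustrative; in practice, the rows would live in a projected database table rather than in memory.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class SignedIn:
    user_id: str
    occurred_at: datetime


@dataclass
class UserActivityRow:
    """One row of a hypothetical pre-calculated read model."""
    sign_in_count: int = 0
    last_sign_in: datetime | None = None


class UserActivityProjection:
    def __init__(self) -> None:
        self.rows: dict[str, UserActivityRow] = {}

    def handle(self, event: SignedIn) -> None:
        # The work happens here, once per event, when the event is projected...
        row = self.rows.setdefault(event.user_id, UserActivityRow())
        row.sign_in_count += 1
        row.last_sign_in = event.occurred_at

    def get(self, user_id: str) -> UserActivityRow | None:
        # ...so a read is a plain lookup with no counting or scanning.
        return self.rows.get(user_id)
```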

It’s worth noting that event sourced applications have their own complexities. They are not a magic hammer that solves every problem. Powerful as they are, reactive systems can be confusing and difficult for some teams to build and maintain. Writing applications that react to events, rather than simply changing data, requires a different programming mindset. It also requires an understanding of all the business processes involved and how different inputs or stimuli affect those processes.

Most importantly, because event sourced applications are reactive, they are very likely to be “eventually consistent.” As in a microservice architecture, this means the business has to accept that there may be small windows of time when different parts of the system aren’t quite in sync. These windows occur because some parts of a large system haven’t yet seen, or fully reacted to, the most recent events. Not every business or application is compatible with that reality.
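The window of inconsistency is easy to see in a small model. In this sketch (hypothetical names throughout), a projection remembers the position of the next event it hasn’t processed. Between an event being appended and `catch_up()` running, which in a real system would happen asynchronously, the read model still shows the old data.

```python
from __future__ import annotations


class EventLog:
    def __init__(self) -> None:
        self.events: list[tuple[str, str]] = []  # (user_id, new_name), in order

    def append(self, user_id: str, name: str) -> None:
        self.events.append((user_id, name))


class NameProjection:
    """A read model that trails the log until it catches up."""

    def __init__(self, log: EventLog) -> None:
        self._log = log
        self._position = 0  # index of the next unprocessed event
        self.names: dict[str, str] = {}

    def lag(self) -> int:
        """How many recorded events this read model has not yet seen."""
        return len(self._log.events) - self._position

    def catch_up(self) -> None:
        # In a real system this would run in a background worker or consumer.
        while self._position < len(self._log.events):
            user_id, name = self._log.events[self._position]
            self.names[user_id] = name
            self._position += 1
```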

If building a new application with this architecture just leads you back to recreating the traditional system’s users table, why go to the effort of event sourcing? It may seem redundant, or even wasteful of storage, but event sourcing is significantly more powerful than a traditional application. So, let’s look at a few examples of where this approach can shine.

Unique Benefits of Event Sourcing

Businesses need insights to improve and operate more effectively. At some point, nearly every application takes on the burden of additional tracking and metrics code to serve this need. Whether it’s for system performance, sales insights, or consumer behavior, there’s always a new need for more usage information after a system is built.

In a traditional system, a developer addresses those new needs by adding new code and integrations. The new metrics become available only after the need is identified, services are set up, and new code is added to the application. All of the interesting details from before that point are lost. If the data wasn’t already in the system, the system can’t create it from nothing later.

When using event sourcing, all of the data is already in the events themselves. One needs only to look back and reevaluate the events to extract that newly identified information.
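As an example of reevaluating old events, suppose the business suddenly asks which users changed their email address within a week of signing up. Nobody planned for that metric, but if signup and email-change events were recorded from the start, a short function can answer it over the entire history. The event types and the question itself are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Event:
    user_id: str
    event_type: str
    occurred_at: datetime


def changed_email_soon_after_signup(events: list[Event], window: timedelta) -> set[str]:
    """A question nobody planned for, answered by re-reading events already in the log."""
    signed_up_at: dict[str, datetime] = {}
    result: set[str] = set()
    for event in events:
        if event.event_type == "UserCreated":
            signed_up_at[event.user_id] = event.occurred_at
        elif event.event_type == "EmailChanged":
            start = signed_up_at.get(event.user_id)
            if start is not None and event.occurred_at - start <= window:
                result.add(event.user_id)
    return result
```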

This is incredibly powerful. You can go back in time to answer any question the recorded events hold the data for. You can derive new information from any series of past events, create data that never previously existed on its own, and tailor current projections to exactly what the business needs today without worrying about what it may need tomorrow. Changing projections and adapting to the future is nearly free. This opens the door to a far greater degree of flexibility, efficiency, and insight into your application and consumers.

Reaping the Benefits of Event Sourcing

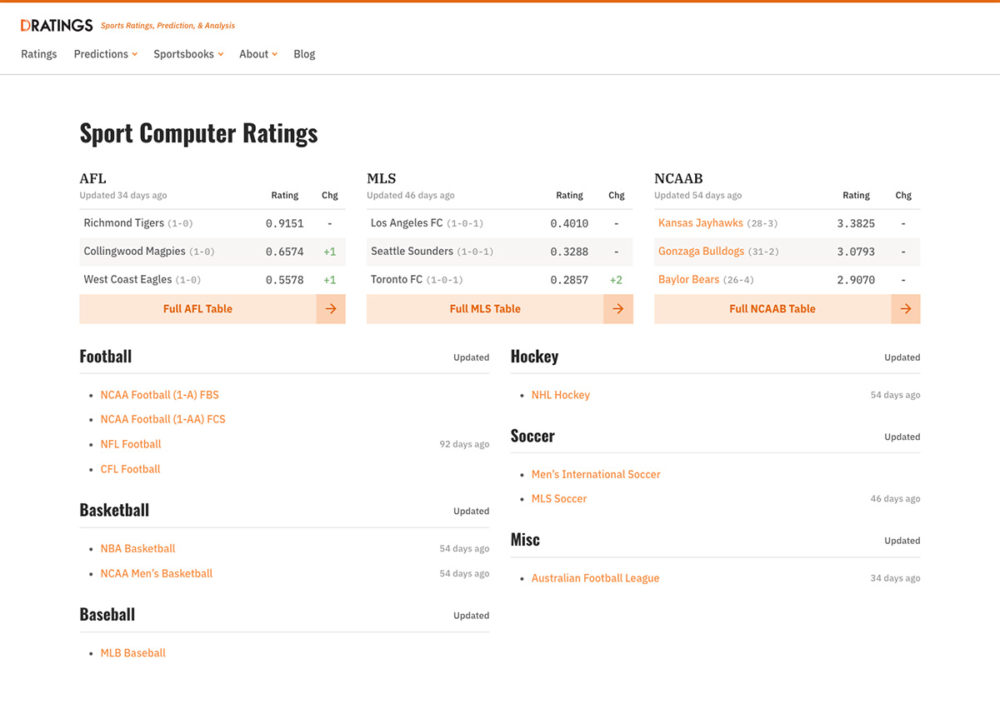

When Envy Labs began redeveloping DRatings, for example, we recognized that sporting event predictions are affected by many factors, including injuries, referee assignments, event locations, and weather. Many of these factors change, and become more accurate, as game time draws near. After the site had been running for several months, we looked back through every game prediction to check its accuracy against the final game results. Going one step further, we used the recorded events to identify how long before a game started those factors stabilized and the predictions became sufficiently accurate and reliable. That new information could then be fed back into the prediction algorithms to improve the core functionality and value of the site. If this application weren’t event sourced, this type of retroactive assessment wouldn’t have been possible. Predictions and game factors could not have been rewound to an arbitrary point in time; that information would have been lost and irretrievable.
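The article doesn’t describe how DRatings performed this analysis, so the following is only a hypothetical sketch of the idea: replay one game’s prediction events in time order and measure how long before kickoff the predicted winner last changed. The `PredictionUpdated` event and the choice of “the predicted winner stopped changing” as the definition of stability are assumptions made for illustration. Computed for many past games and compared with the final results, a measure like this could indicate when predictions tend to become reliable.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class PredictionUpdated:
    game_id: str
    predicted_winner: str
    occurred_at: datetime


def stable_lead_time(updates: list[PredictionUpdated], kickoff: datetime) -> timedelta | None:
    """How long before kickoff the predicted winner stopped changing.

    Replays one game's prediction events in time order and remembers when the
    predicted winner last changed. Returns None if there were no predictions
    before kickoff.
    """
    last_winner = None
    last_change_at = None
    for update in updates:
        if update.occurred_at > kickoff:
            break  # ignore anything recorded after the game started
        if update.predicted_winner != last_winner:
            last_winner = update.predicted_winner
            last_change_at = update.occurred_at
    return None if last_change_at is None else kickoff - last_change_at
```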

Where a traditional application can tell you a user’s current name, or the items in an order they’ve placed, the example above highlights how an event sourced system can do much more. Traditional systems can answer historical questions too, but only if someone anticipated the question and built the tracking for it in advance. Event sourced systems shine here because the answer to any “how did we get here” question is already in the log; no new tracking code is required. Furthermore, an event sourced system can answer future, not-yet-thought-of questions, with data going all the way back to the start of the application.

Event Sourcing Architecture Considerations

It may seem like this approach would require a drastically different server architecture. You may assume that it requires you to forgo a traditional database system, or that it needs some new event storage system your team would have to learn and support. But that’s not the case.

The event log is simply a time-ordered list of events. It can be persisted almost anywhere, although different solutions offer their own benefits and trade-offs. It’s common to store these events in a standard PostgreSQL database, especially when an application is just launching, because the setup is simple and familiar.
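A minimal event table needs little more than a global position, a stream identifier, an event type, and a payload. This sketch uses Python’s built-in `sqlite3` module so it runs without a database server; the schema and function names are hypothetical. In PostgreSQL, you would likely use an identity column for `position` and a `JSONB` column for the payload, but the idea is the same: the application only ever inserts rows and reads them back in order.

```python
import json
import sqlite3

# Hypothetical minimal event table (sqlite3 stands in for PostgreSQL here).
SCHEMA = """
CREATE TABLE IF NOT EXISTS events (
    position    INTEGER PRIMARY KEY AUTOINCREMENT,  -- global order of the log
    stream_id   TEXT NOT NULL,                      -- e.g. 'user-1'
    event_type  TEXT NOT NULL,                      -- e.g. 'NameChanged'
    payload     TEXT NOT NULL,                      -- JSON-encoded event data
    recorded_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
)
"""


def append_event(conn: sqlite3.Connection, stream_id: str, event_type: str, data: dict) -> int:
    """Append one event; the application only ever INSERTs, never UPDATEs or DELETEs."""
    cur = conn.execute(
        "INSERT INTO events (stream_id, event_type, payload) VALUES (?, ?, ?)",
        (stream_id, event_type, json.dumps(data)),
    )
    conn.commit()
    return cur.lastrowid


def read_events(conn: sqlite3.Connection, after_position: int = 0):
    """Yield events in log order, optionally resuming after a known position."""
    rows = conn.execute(
        "SELECT position, stream_id, event_type, payload FROM events "
        "WHERE position > ? ORDER BY position",
        (after_position,),
    )
    for position, stream_id, event_type, payload in rows:
        yield position, stream_id, event_type, json.loads(payload)
```

Keeping the position lets projections resume where they left off instead of replaying the whole log each time.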

Using PostgreSQL has the benefit of being a well-supported, widely available storage system. The same database can also hold any projected tables you want to create. It then becomes a one-stop shop, which simplifies training, implementation, and maintenance.

Beyond PostgreSQL, Apache Kafka is a popular choice for the underlying event storage system. Kafka durably stores messages, preserves their order within each partition, and offers configurable delivery guarantees. It also handles replication, partitioning, load balancing across consumers, and recovery from broker failures. Together, these make Kafka a robust, high-throughput persistence and distribution hub for event sourced events.

With that in mind, a Kafka-based event sourced setup can serve as a very robust foundation for a microservices system. It enables you to create massively scalable, interconnected yet decoupled systems. All connected applications react to the same series of events, in the same order (within each partition), containing the same information. This greatly simplifies the communication problems, and reduces the communication latencies, that often occur in microservice architectures.

Going further, once events are shareable across systems, you can test drive new features. Adding a new standalone, purpose-driven or experimental application to meet business or consumer needs is straightforward. The new application need only connect to the Kafka cluster and start listening to the event streams. It could listen for all of the data or just a very limited subset of those events.

An event sourced system, once in place, can be used to test drive and compare new analytics systems: backfill a new analytics system with all the useful events from the beginning of the log. Nearly any new service can be added today as if it had already been running for years. That is rarely possible with traditional applications.
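The backfill works because each consuming application tracks its own position (in Kafka, its committed offset) in the shared, ordered log. The following is a plain-Python model of that idea, not the Kafka client API; `SharedLog` and `Consumer` are hypothetical. A new consumer that starts at offset 0 replays all of history before receiving live events, and it can keep only the event types it cares about. With a real Kafka consumer, starting from the beginning typically means a new consumer group with `auto.offset.reset` set to `earliest`, and it only works if the topic retains events for as long as you want to backfill.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SharedLog:
    """Models one ordered, append-only partition shared by every application."""
    events: list[dict] = field(default_factory=list)

    def append(self, event: dict) -> None:
        self.events.append(event)


@dataclass
class Consumer:
    """Each application keeps its own offset, so consumers never affect one another."""
    name: str
    interested_in: frozenset[str]  # the subset of event types this application handles
    offset: int = 0                # 0 = start from the beginning of the log (backfill)
    handled: list[dict] = field(default_factory=list)

    def poll(self, log: SharedLog) -> None:
        """Process every event after our offset, advancing the offset as we go."""
        for event in log.events[self.offset:]:
            if event["type"] in self.interested_in:
                self.handled.append(event)
            self.offset += 1


log = SharedLog()
log.append({"type": "UserSignedIn", "user": "u1"})
log.append({"type": "NameChanged", "user": "u1"})
log.append({"type": "UserSignedIn", "user": "u2"})

# A brand-new analytics service joins later and backfills from offset 0...
analytics = Consumer("new-analytics", frozenset({"UserSignedIn"}))
analytics.poll(log)
# ...then continues with live events as they arrive.
log.append({"type": "UserSignedIn", "user": "u3"})
analytics.poll(log)
```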

If event sourcing sounds interesting and you’d like to try it in your next application, then contact us. We’re happy to help you get started today.
