Accepting the Ethical Responsibilities Behind Creating Digital Platforms

Article by Nick Walsh — Jan 24, 2020

I spent a few years refereeing youth soccer. Sunburns and comments shouted at me aside, I’ll always remember something an instructor said during the three-day introductory course:

“You’ll need to hit the field knowing the rules 100%, and improve from there.”

If you haven’t read FIFA’s Laws of the Game, don’t worry — it’s not exactly a page turner. Pulling the topic back into the realm of digital experiences, though, it’s useful to note that the rulebook mentions the spirit of the game nine times:

The Laws cannot deal with every possible situation, so where there is no direct provision in the Laws, The IFAB expects the referee to make a decision within the ‘spirit’ of the game – this often involves asking the question, “what would football want/expect?”

What would football want/expect? That’s a pretty big mandate to place on someone in a bright yellow shirt and tall socks. If we apply it to platform development, however, there’s a direct parallel: The Laws of User Experience can’t cover every possible situation, and the ‘spirit’ of the game comes down to “what would users want/expect?”

Hostile interfaces make a different substitution: What would the bottom line want/expect? When prices are hidden, free trials require a credit card, and sign-up forms demand more data than they need, who benefits?

Discussions down this path often point out our industry’s lack of a Hippocratic Oath. Google’s “Don’t be evil” was probably the closest, but it’s in danger of disappearing completely. We’re responsible for applications that have become an integral part of the world’s work and personal lives, but there’s no singular, evenly-applied standard to hold those platforms to. Or even an expectation to put the user first.

Techlash

Hopefully, your personal moral brand — or the ethical code you subscribe to, at least — is drawn to human-centered design. Even if it isn’t, market forces are trending towards its necessity:

- The aptly-named techlash has made recruiting harder for technology giants like Facebook and Palantir as skepticism of the industry grows.

- Legal requirements are beginning to impact how software is built. This includes the General Data Protection Regulation, the California Consumer Privacy Act, and an increase in lawsuits filed over alleged violations of the Americans with Disabilities Act (Title III) and the Rehabilitation Act (Section 508).

Usage statistics correlated with ethics are harder to quantify, but part of user advocacy is the understanding that there often aren’t alternatives. Work, education, peers, or family may dictate that a particular application is used — hence the appeal to responsibility.

As a baseline, let’s look at three avenues of responsibility ethical tech companies must consider: the evil (experience), the legal (accessibility), and the overlooked (performance).

Software Experience Patterns

Of the three categories we’re taking a look at, user experience represents the muddiest of ethical waters. Fumbling on data regulations like HIPAA, PCI DSS, and GDPR can be serious, but the run-of-the-mill hostile interface doesn’t break any laws. You likely run across examples on a daily basis.

Dark Patterns

Dark Patterns describe platform design that actively works against users for the sake of data collection or revenue. These include:

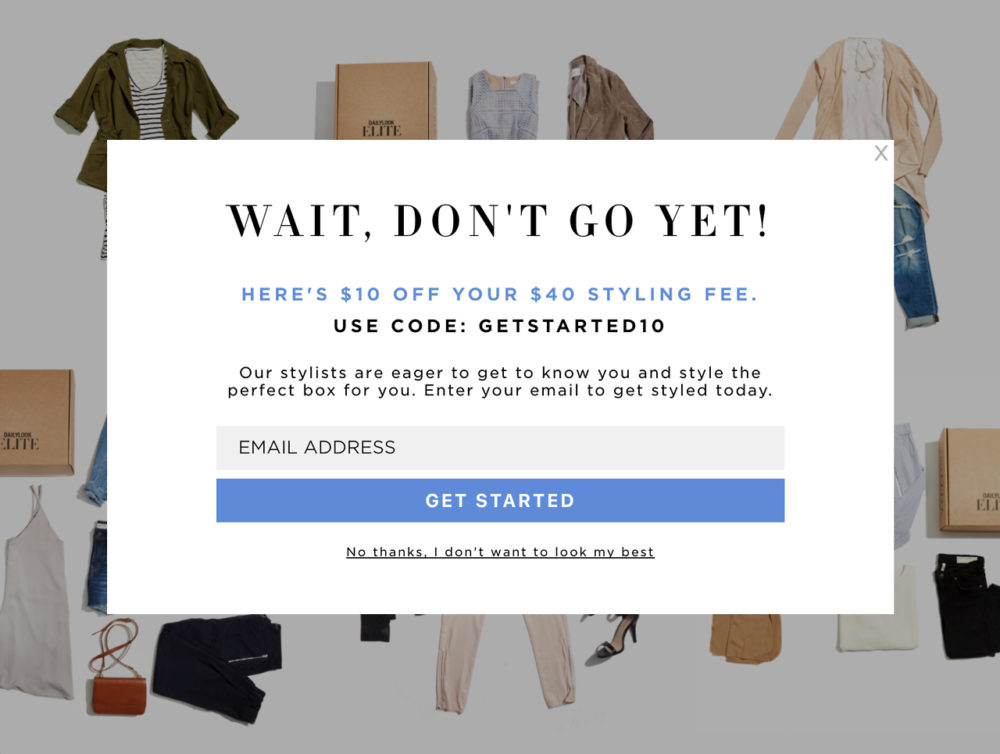

- Confirmshaming, where content attempts to guilt you into taking action. Opting out of a newsletter pop-up may require clicking a tiny link that reads “No thanks, I hate learning.”

- Requiring a phone call to cancel a subscription service.

- Designing advertisements to look like normal content.

- Rigging an application to recommend opioids to doctors in a deal with the drugmaker.

Harking back to the core question (what would users want/expect?), our mandate is pretty clearly against these sorts of tactics. On a personal level, too, there’s no faster way to erode brand confidence: I’m not going to resubscribe to a service that placed obstacles in the way of dropping that subscription.

Habit-forming

Creating and rewarding habits is at the core of many applications. Language-learning streaks from Duolingo and reminders from our watches to stand up reinforce positive behaviors tied to self-improvement. We even followed suit in the early days of gamification, making it a core feature of Code School.

With the wrong intentions, however, habits become addictions.

Research is beginning to measure the health impacts of social media addiction. Most notably, it’s led Instagram to experiment with hiding like counts, and it inspired a pretty crazy episode of Black Mirror. As the digital age adage goes: If you’re not paying for the service, you are the product.

Optimizing an experience for addiction in the name of ad revenue and eyeballs should sound abhorrent, full stop. We have a responsibility to place mental health over usage statistics.

Digital Abuse

Sites with social and community-building features have long been hotbeds of abuse. In a rush to market, many of the worst offending platforms skip out on reporting features, moderation, and enforced conduct guidelines.

Instead of playing catch-up when it’s deemed “a big enough problem” later, protecting users should be a core deliverable of any digital product, in every phase.

Prioritizing Web Accessibility

I’ve yet to talk software with someone who advocated against accessibility. The difficulty is in deciding budget, time, and just how far to go; there’s never a defined end to the work. It’s important to remember:

- With a million users, a problem that excludes just 2% impacts a stadium full of people.

- As Microsoft points out in their Inclusive Design Toolkit, exclusion can be temporary or situational. An interface that’s friendly towards a one-handed individual is also inclusive of people with an injured arm or with a baby in one hand.

Lawsuits

The Americans with Disabilities Act (Title III) and the Rehabilitation Act (Section 508) form the basis of a rising number of lawsuits. There were 814 filings in 2017, which grew to 2,250 in 2018 according to law firm Seyfarth Shaw LLP. Neither Act outlines specifics for compliance.

Settlements, though, have been pointing to the Web Content Accessibility Guidelines as the standard to meet. Meeting these guidelines is a team effort — each specialization has a role to play, from appropriate color contrast to alternate text for visual media. Like any new way of doing things, hitting a majority of the checklist items in one pass is easier with practice.
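Color contrast is one of the few checklist items that can be checked with plain arithmetic. Here’s a minimal sketch of the contrast ratio calculation as the Web Content Accessibility Guidelines 2.x define it, with the 4.5:1 (normal text) and 3:1 (large text) thresholds from the AA level. The function names and the `RGB` type are my own for illustration, not part of any library.

```typescript
// Illustrative helper names; the formulas follow the WCAG 2.x definitions.
type RGB = [number, number, number]; // 0-255 per channel

// Convert one 8-bit sRGB channel to its linear-light value.
function linearize(channel: number): number {
  const c = channel / 255;
  return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

// Relative luminance: 0 for black, 1 for white.
function relativeLuminance(color: RGB): number {
  const [r, g, b] = color;
  return 0.2126 * linearize(r) + 0.7152 * linearize(g) + 0.0722 * linearize(b);
}

// Contrast ratio between two colors, from 1 (identical) to 21 (black on white).
function contrastRatio(a: RGB, b: RGB): number {
  const la = relativeLuminance(a);
  const lb = relativeLuminance(b);
  const lighter = Math.max(la, lb);
  const darker = Math.min(la, lb);
  return (lighter + 0.05) / (darker + 0.05);
}

// AA asks for at least 4.5:1 on normal text and 3:1 on large text.
function meetsAA(foreground: RGB, background: RGB, largeText = false): boolean {
  return contrastRatio(foreground, background) >= (largeText ? 3 : 4.5);
}
```

A check like `meetsAA([118, 118, 118], [255, 255, 255])` could run in a design-token test, so a failing gray gets caught before it ships rather than in an audit.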

Compatibility

We’ve come a long way from requiring pixel perfection on legacy Internet Explorer, and covering a wide array of browsers and devices is more straightforward than ever. Experiences don’t need to be identical, but they should be consistent and usable beyond the equipment that built them.

Just like being locked in to certain applications, users don’t always have much of a choice over what they’re using. Again, percentage points can represent a whole bunch of real people.

The Ethics of Performance

Raise your hand if you’ve suddenly been left without battery or data while absentmindedly browsing the web. (Mine’s in the air.) How platforms perform can’t be lumped into the predatory or illegal buckets, but it’s an oft-forgotten or neglected responsibility affecting:

- Battery usage

- Data consumption against limited data plans

- Input delays and frame rates

The average download size for a web page continues to grow, primarily through an increase in media quality and count. To keep payloads reasonable, we must wait to load things like images and video until they’re actually visible, and size that media to the device. Global products are at the mercy of varying internet quality, and large network requests may exclude entire regions from your offering.
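To make the two ideas in that paragraph concrete, here’s a rough sketch of the decisions involved: which rendition of an image to request for a given slot and screen density, and whether an element is close enough to the viewport to be worth loading. The names (`pickImageWidth`, `isNearViewport`, `Box`) and the 200-pixel margin are assumptions of mine for illustration.

```typescript
// Hypothetical helpers; names and defaults are illustrative only.

// Pick the smallest available rendition that still covers the slot
// at the device's pixel density, falling back to the largest one.
function pickImageWidth(
  availableWidths: number[],
  slotCssWidth: number,
  devicePixelRatio = 1
): number {
  if (availableWidths.length === 0) {
    throw new Error("at least one rendition width is required");
  }
  const needed = Math.ceil(slotCssWidth * devicePixelRatio);
  const sorted = availableWidths.slice().sort((a, b) => a - b);
  for (const width of sorted) {
    if (width >= needed) return width;
  }
  return sorted[sorted.length - 1];
}

// Element edges measured from the top of the viewport, in CSS pixels
// (the shape getBoundingClientRect() reports in a browser).
interface Box {
  top: number;
  bottom: number;
}

// True once the element is within `marginPx` of the visible area.
function isNearViewport(box: Box, viewportHeight: number, marginPx = 200): boolean {
  return box.bottom >= -marginPx && box.top <= viewportHeight + marginPx;
}
```

In practice, browsers handle much of this natively: the `srcset` and `sizes` attributes cover rendition choice, and `loading="lazy"` on images and iframes covers deferral. The sketch is mostly useful for custom media players or background images where those attributes don’t apply.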

Similarly, animations and effects add experiential flair — at the cost of increased processing overhead. Banning them isn’t necessary or reasonable, but included animations should be optimized, purpose-driven, and scaled to the device’s capabilities (or the user’s preferences).

Motion sensitivity can render a moving interface unusable, so user settings like the operating system’s reduced-motion preference should be honored.
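As a sketch of what honoring that preference might look like, the snippet below reads the standard `prefers-reduced-motion` media query and scales animation settings accordingly. The `MotionSettings` shape, the function names, and the specific durations are assumptions for illustration; the matcher is passed in so the logic stays testable outside a browser.

```typescript
// Anything that answers a media query; in a browser, pass
// (query) => window.matchMedia(query).
type MediaQueryMatcher = (query: string) => { matches: boolean };

function prefersReducedMotion(matchMedia: MediaQueryMatcher): boolean {
  return matchMedia("(prefers-reduced-motion: reduce)").matches;
}

// Hypothetical settings object consumed by the rest of the interface.
interface MotionSettings {
  durationMs: number;
  parallax: boolean;
}

// The user's preference wins over everything; device capability comes second.
function motionSettings(reducedMotion: boolean, lowPowerDevice = false): MotionSettings {
  if (reducedMotion) return { durationMs: 0, parallax: false };
  if (lowPowerDevice) return { durationMs: 150, parallax: false };
  return { durationMs: 300, parallax: true };
}
```

The same preference is available directly in CSS through a `@media (prefers-reduced-motion: reduce)` block, which is often the simpler place to switch transitions off.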

Advocate for the User

What would users want/expect?

Beyond a responsibility to people trusting you with a need, their data, and their mental health, laws and regulations are catching up with less-than-subtle suggestions about how experiences should be built.

Human-centered design is a foundation for ethical tech companies — and a constant source of struggle. It’s easier to argue against the bottom line as a team, but every platform needs at least one user advocate highlighting the ethics behind what we do. Anything that creates something from nothing and fills it with people has the same mandate.
